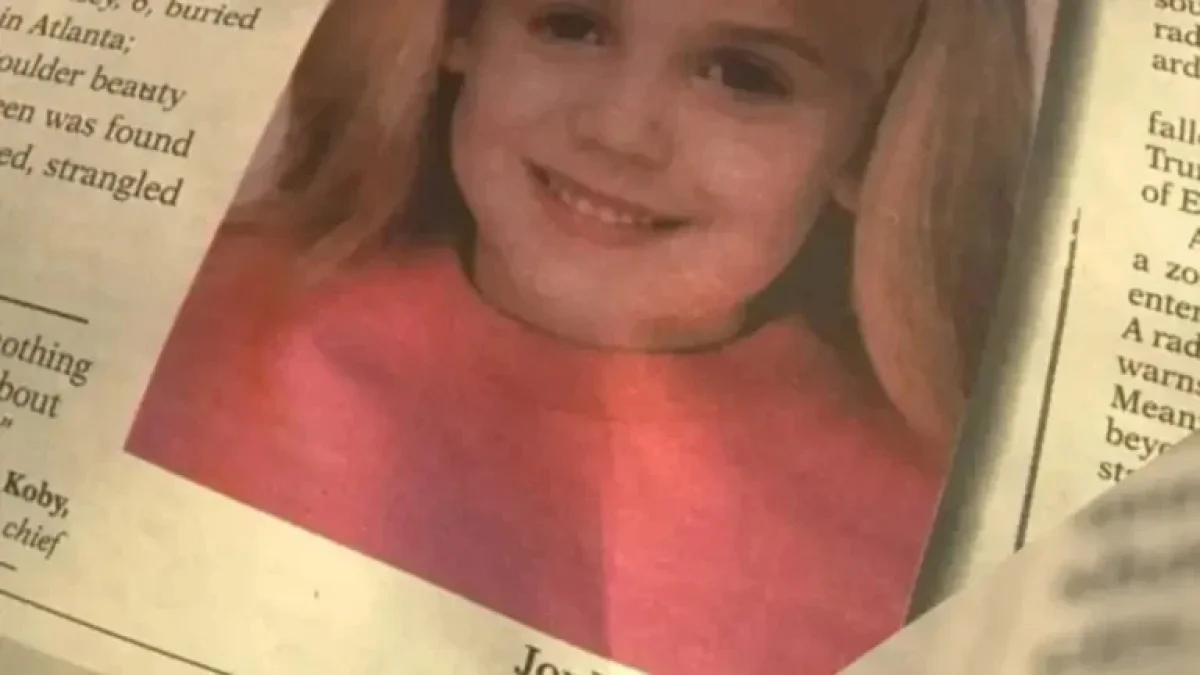

The recent TikTok video hoax linking JonBenet Ramsey to the Epstein files has sparked discussion about the dangers of AI deepfake misinformation and the importance of platform safety. John Ramsey, JonBenet's father, quickly debunked the claim, stating that the video was generated with artificial intelligence. Even so, the speed at which the false claim spread has raised concerns about the policy implications in the UK and the risks for advertisers.

For investors, the incident raises questions about moderation costs, enforcement procedures, and brand safety risks at social media platforms and AI tool providers. The key issues are how effective moderation is, how quickly content is removed, and how exposed brands are to damaging misinformation. Understanding the fallout from hoaxes like this one is important for anyone assessing how digital misinformation affects platforms, advertisers, and users.

The hoax highlights the difficulty of combating misinformation and underscores the need for stricter regulation and stronger safety measures on social media platforms. The UK's Online Safety Act, designed to tackle illegal content and protect vulnerable users, places greater responsibility on platforms to mitigate risks and shield users from harmful content, including deceptive AI deepfakes.

As platforms like TikTok grapple with the spread of misinformation, expectations are growing for clearer content labeling, better verification processes, and more transparent moderation practices. Regulators such as Ofcom are likely to demand robust risk assessments, clear reporting mechanisms, and efficient removal procedures to tackle deceptive content like the JonBenet Ramsey Epstein files hoax and to prevent similar incidents.

Key performance indicators (KPIs) such as median takedown time, the share of views that content received before removal, deepfake labeling rates, appeal reversal rates, and brand adjacency incidents can offer useful insight into platform resilience and user safety. Advertisers are encouraged to tighten brand safety settings, apply stricter inventory filters, and work with platforms that publish transparent risk assessments and show steady improvement in harmful-view metrics over time.

Investors in the UK should watch closely how platforms respond to misinformation incidents like the JonBenet Ramsey hoax, as these events can significantly affect brand reputation, regulatory scrutiny, and operating costs. Platforms that address misinformation risks proactively, protect users, and show lower exposure to harmful content are likely to retain stronger advertiser demand and investor confidence.

In short, the JonBenet Ramsey Epstein files hoax is a stark reminder of the challenges posed by AI deepfake misinformation and of the importance of platform safety and regulatory compliance. By staying vigilant, pressing for transparency, and holding platforms accountable, stakeholders can help limit the spread of harmful misinformation and build a safer online environment for all users.